Writing production-ready ETL pipelines in Python / Pandas

Why take this course?

🎓 Course Title: Writing Production-Ready ETL Pipelines in Python

Unlock the Power of Data with Expert Python Skills! 🚀

Welcome to our comprehensive course on writing production-ready ETL (Extract, Transform, Load) pipelines using Python and current data engineering tools. This course is designed for professionals who want to master data pipeline development and build projects that are efficient, scalable, and robust.

Course Instructor: Jan Schwarzlose 👩‍🏫

Course Overview:

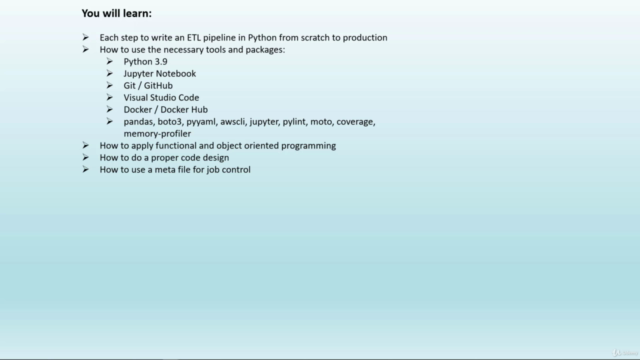

In this course, you'll embark on a journey through the life cycle of an ETL pipeline, from conception to deployment in a production environment. You'll gain hands-on experience with tools and libraries such as:

- Python 3.9

- Jupyter Notebook

- Git & GitHub

- Visual Studio Code

- Docker & Docker Hub

- Pandas, boto3, pyyaml, awscli (and many more!)

Key Learning Points:

- Functional vs. Object-Oriented Programming in Data Engineering contexts.

- Best Practices in Python Development:

  - Design principles and clean coding

  - Virtual environment setup

  - Project/folder organization

  - Configuration management

  - Effective logging

  - Robust exception handling

  - Code linting and formatting

  - Dependency management

  - Performance tuning with profiling

  - Unit and integration testing

  - Containerization with Docker

  - Continuous deployment strategies
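The contrast between the functional and object-oriented styles named in the list above can be illustrated with a small sketch. It is not taken from the course material: the names `add_price_change` and `PriceChangeTransformer` and the `isin`/`open`/`close` keys are hypothetical, and plain dictionaries stand in for a Pandas DataFrame so the example runs with the standard library alone.

```python
from dataclasses import dataclass


# Functional style: a pure function, with configuration passed as an argument.
def add_price_change(rows, decimals=2):
    """Return new rows that also carry the percent change from open to close."""
    return [
        {
            **row,
            "change_pct": round(
                (row["close"] - row["open"]) / row["open"] * 100, decimals
            ),
        }
        for row in rows
    ]


# Object-oriented style: configuration lives on the instance and the
# behaviour is exposed through a method.
@dataclass(frozen=True)
class PriceChangeTransformer:
    decimals: int = 2

    def transform(self, rows):
        return add_price_change(rows, self.decimals)


# Hypothetical sample row; the ISIN is made up for illustration.
rows = [{"isin": "DE0001", "open": 100.0, "close": 105.0}]
functional_result = add_price_change(rows)
oop_result = PriceChangeTransformer(decimals=2).transform(rows)
```

Both calls produce the same rows. The class version mainly pays off once a step needs several settings or shared state, while the function version stays easier to test in isolation.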

Real-World Application:

Throughout the course, you'll apply these concepts to a real dataset from Xetra, a platform of the Deutsche Börse Group. You'll extract data from an AWS S3 bucket, transform it as needed, and load the results into another S3 bucket – all within a schedulable pipeline.
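The extract, transform, and load flow described above might be sketched roughly as follows. This is an illustration under stated assumptions, not the course's implementation: `InMemoryBucket` is a hypothetical stand-in for an S3 bucket (the course works against real buckets with boto3), the column names `isin`, `price`, and `volume` and the object keys are invented, and the `csv` module replaces Pandas so the sketch runs without extra packages or AWS credentials.

```python
import csv
import io


class InMemoryBucket:
    """Hypothetical stand-in for an S3 bucket: maps object keys to text."""

    def __init__(self):
        self._objects = {}

    def put(self, key, body):
        self._objects[key] = body

    def get(self, key):
        return self._objects[key]


def extract(bucket, key):
    """Read one CSV object from the source bucket into a list of dicts."""
    return list(csv.DictReader(io.StringIO(bucket.get(key))))


def transform(rows):
    """Aggregate trades per ISIN: highest price and total traded volume."""
    report = {}
    for row in rows:
        entry = report.setdefault(
            row["isin"],
            {"isin": row["isin"], "max_price": float("-inf"), "volume": 0},
        )
        entry["max_price"] = max(entry["max_price"], float(row["price"]))
        entry["volume"] += int(row["volume"])
    return sorted(report.values(), key=lambda entry: entry["isin"])


def load(bucket, key, rows):
    """Write the report rows as CSV text into the target bucket."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=["isin", "max_price", "volume"], lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    bucket.put(key, buffer.getvalue())


def run(source, target, source_key, target_key):
    """One pipeline run: extract from source, transform, load into target."""
    load(target, target_key, transform(extract(source, source_key)))


source, target = InMemoryBucket(), InMemoryBucket()
source.put(
    "2022-01-03.csv",
    "isin,price,volume\nAT01,10.5,100\nDE01,20.0,50\nAT01,11.0,25\n",
)
run(source, target, "2022-01-03.csv", "report.csv")
report_csv = target.get("report.csv")
```

Keeping the three steps as separate functions that only talk to a bucket-like object makes each one testable without network access; swapping the stand-in for a real S3 client would then be confined to `extract` and `load`.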

Production-Ready Pipeline:

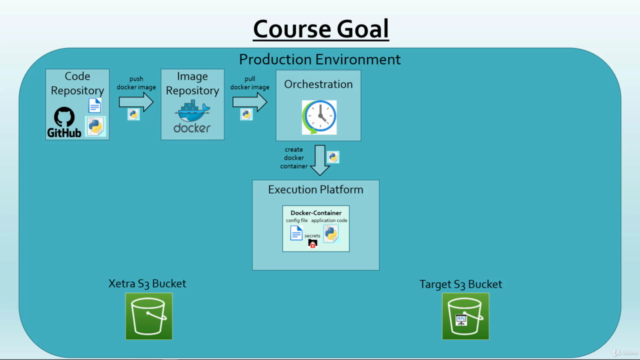

The ETL pipeline you'll create will be designed to deploy easily in a production environment that supports containerized applications. We'll cover the entire stack, including:

- GitHub for code versioning

- Docker Hub for container image storage and distribution

- Kubernetes as an execution platform

- Argo Workflows or Apache Airflow for orchestration
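For a containerized job on a stack like the one listed above, the platform generally judges a run by the process exit code, so the application needs a small entry point that turns failures into a non-zero code. The following is a minimal sketch under that assumption; `run_pipeline`, the logger name `etl_job`, and the single `config` argument are hypothetical and not taken from the course.

```python
import argparse
import logging

logger = logging.getLogger("etl_job")


def run_pipeline(config_path):
    """Placeholder for the actual extract/transform/load steps."""
    if not config_path.endswith((".yml", ".yaml")):
        raise ValueError(f"expected a YAML config file, got {config_path!r}")
    logger.info("running pipeline with config %s", config_path)


def main(argv=None):
    """Parse arguments, run the job once, and return a process exit code."""
    parser = argparse.ArgumentParser(description="Run the ETL job once.")
    parser.add_argument("config", help="path to the job configuration file")
    args = parser.parse_args(argv)
    logging.basicConfig(
        level=logging.INFO,
        format="%(asctime)s %(levelname)s %(name)s: %(message)s",
    )
    try:
        run_pipeline(args.config)
    except Exception:
        # Log the full traceback, then signal failure to the caller.
        logger.exception("pipeline run failed")
        return 1
    return 0
```

In a container image, the start command would typically end in `sys.exit(main())`, so that an orchestrator can mark the run as failed and retry or alert as configured.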

What You'll Gain:

- Interactive, Practical Lessons: Code alongside real-world scenarios.

- Complete Project Access: Review the entire project on GitHub.

- Ready-to-Use Docker Image: Utilize a pre-configured Docker image with application code on Docker Hub.

- Detailed Slides and Documentation: Download slides for each theoretical lesson and receive additional resources for deep dives into specific topics.

Course Structure:

- Introduction to ETL Concepts 📐

  - Understanding the ETL workflow

  - Introduction to the Xetra dataset

- Setting Up Your Development Environment 🛠️

  - Installing necessary tools and libraries

  - Configuring your IDE (Visual Studio Code)

- Writing the ETL Pipeline ✍️

  - Extracting data from AWS S3

  - Transforming data with Pandas and Python packages

  - Loading transformed data to another AWS S3 bucket

- Best Practices in Python Development 🏗️

  - Applying design principles and clean coding

  - Managing dependencies and environments

  - Ensuring code quality with linting, testing, and profiling

- Containerization and Deployment 🐉

  - Dockerizing your ETL pipeline

  - Deploying to a production environment using GitHub, Docker Hub, Kubernetes, and Argo Workflows/Apache Airflow

- Real-World Application and Case Studies 🌍

  - Analyzing real ETL pipelines in the industry

  - Troubleshooting common issues and optimizing performance

- Final Project: Building Your Own ETL Pipeline 🏋️‍♂️

  - Applying all the concepts learned throughout the course

  - Creating a fully functional, production-ready ETL pipeline for the Xetra dataset

Join us on this data engineering adventure and elevate your Python skills to new heights! 🌟

Ready to transform your data engineering skills? Enroll in this course today and become a master of ETL pipelines with Python!

Comidoc Review

Our Verdict

This course offers valuable insight into writing ETL pipelines with Python, pandas, and data engineering best practices. Some concepts are explained only briefly and a few areas get minimal coverage, but the opportunity to learn from a real-world project outweighs these weaknesses. The course was updated in July 2022, and students can take its production-oriented perspective and apply it to their own projects.

What We Liked

- Covers end-to-end ETL pipeline development using best practices in Python and Data Engineering.

- Provides an opportunity to learn from a real-world project and see the instructor's thinking process.

- Incorporates functional programming, object-oriented code design, and a meta file for job control.

- Exposes students to pandas and SQL-style window functions for implementing ETL transformations in Python.
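The review mentions a meta file for job control without describing it. One common interpretation, assumed here, is a small file that records which source dates have already been processed, so that a scheduled run only picks up new ones. The sketch below follows that assumption; the column names `source_date` and `processed_on` and all function names are hypothetical.

```python
import csv
import io
from datetime import date, timedelta


def read_processed_dates(meta_csv):
    """Parse the meta file and return the set of dates already processed."""
    reader = csv.DictReader(io.StringIO(meta_csv))
    return {date.fromisoformat(row["source_date"]) for row in reader}


def dates_to_process(meta_csv, first_date, today):
    """List every date from first_date to today that is not in the meta file."""
    processed = read_processed_dates(meta_csv)
    span = (today - first_date).days + 1
    candidates = (first_date + timedelta(days=offset) for offset in range(span))
    return [day for day in candidates if day not in processed]


def append_processed(meta_csv, days, processed_on):
    """Return the meta file content with one new line per processed date."""
    lines = [f"{day.isoformat()},{processed_on.isoformat()}\n" for day in days]
    return meta_csv + "".join(lines)


meta = "source_date,processed_on\n2022-01-03,2022-01-04\n2022-01-04,2022-01-05\n"
pending = dates_to_process(meta, date(2022, 1, 3), date(2022, 1, 6))
meta = append_processed(meta, pending, date(2022, 1, 6))
```

Updating the meta file only after a successful load would let a failed run be retried without skipping dates, which is one reason to keep this bookkeeping separate from the transformation logic.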

Potential Drawbacks

- Lacks detailed explanations for some concepts, so students may need to research them independently.

- Students expecting in-depth coverage of testing and productionizing the pipeline may be disappointed.

- The object-oriented approach in some parts may add unnecessary complexity to basic tasks.

- Coverage of pipeline deployment and AWS/Kubernetes setup is minimal, which left some students disappointed.