Spark Project on Cloudera Hadoop (CDH) and GCP for Beginners

Why take this course?

🛠️ Course Title: Spark Project on Cloudera Hadoop (CDH) and GCP for Beginners

🚀 Headline: Building a Data Processing Pipeline Using Apache NiFi, Apache Kafka, Apache Spark, Cassandra, MongoDB, Hive, and Zeppelin

Are you ready to dive into the world of big data processing? In the ever-evolving retail landscape, both brick-and-mortar stores and eCommerce platforms generate a colossal amount of data in real time. This course is your gateway to turning that influx of information into actionable insights!

Course Description:

Retail businesses are swimming in a sea of data, and the key to success lies in the ability to process and analyze that data swiftly and effectively. Traditional data handling methods can no longer keep up: the modern retail environment demands real-time processing to respond to consumer trends and behaviors as they emerge.

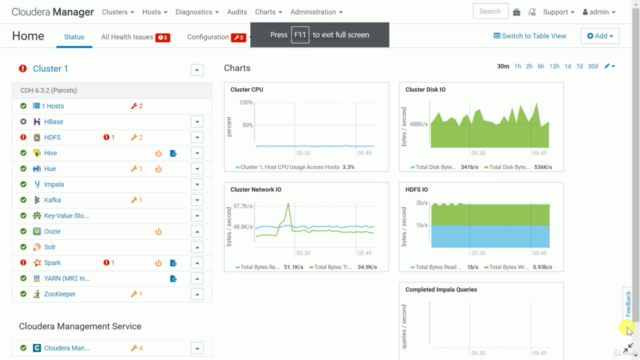

To meet these demands, we're going to build a robust Data Processing Pipeline that harnesses the power of:

- 🔗 Apache NiFi: A versatile data routing and automation tool to orchestrate your data flows.

- ⚡️ Apache Kafka: A high-throughput, low-latency platform for handling real-time data feeds.

- 🚀 Apache Spark: An open-source unified analytics engine capable of processing massive datasets in a distributed environment.

- 📊 Cassandra and MongoDB: Scalable NoSQL databases that handle diverse data types and structures.

- 📚 Apache Hive: A data warehouse software project built on top of Hadoop for providing data summarization and ad hoc querying.

- 📝 Apache Zeppelin: An interactive notebook platform for sharing and collaborating on data-intensive workflows.

What You Will Learn:

- The fundamentals of big data processing and the architecture behind it.

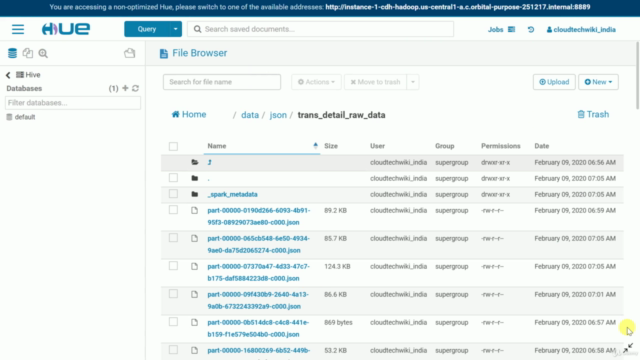

- How to set up a Cloudera Hadoop (CDH 6.3) cluster on Google Cloud Platform (GCP).

- Programming with Apache Spark using Scala and PySpark.

- Real-time data ingestion and stream processing using Apache Kafka.

- Designing and implementing ETL (Extract, Transform, Load) processes with Apache NiFi.

- Storing, managing, and querying large datasets using Apache Cassandra and MongoDB.

- Performing complex data analysis and generating insights with Apache Hive and Zeppelin.

Why This Course?

- Practical Exposure: Learn by doing with hands-on projects that mirror real-world scenarios.

- Expert Guidance: Gain insights from a seasoned instructor, Pari Margu, who has extensive experience in the field.

- Cutting-Edge Technologies: Explore the latest tools and technologies used in handling big data.

- Community Support: Join a community of like-minded learners and professionals.

- Career Growth: Equip yourself with in-demand skills that open doors to exciting opportunities in data science, analytics, and more.

Enroll now to embark on your journey into the fascinating world of big data processing! 🌟
