Real Time Spark Project for Beginners: Hadoop, Spark, Docker

Why take this course?

🌟 Course Headline:

Master Real-Time Data Processing with Apache Kafka, Apache Spark, Hadoop & More!

🚀 Course Title:

Real Time Spark Project for Beginners: Building a Scalable Data Pipeline

Course Description:

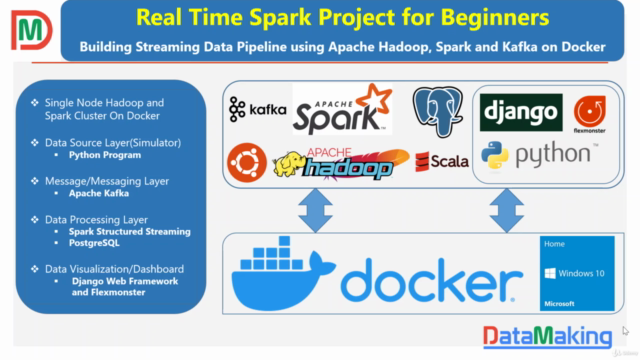

Dive into the world of big data with a comprehensive online course designed for beginners. You'll learn how to use Apache Kafka, Apache Spark, Hadoop, PostgreSQL, Django, and Flexmonster within a Dockerized environment to create a robust real-time data pipeline.

What You'll Learn:

-

Understanding the Challenge:

- The vast amounts of data generated by servers in real-time require immediate processing for actionable insights.

- The critical role of a scalable and reliable architecture to handle this deluge of data efficiently.

-

Building Your Pipeline:

- Setting up a Dockerized environment to ensure your project is portable, consistent, and easy to deploy.

- Utilizing Apache Spark with Scala and PySpark on a Hadoop Cluster to manage and process large-scale data.

- Implementing Apache Kafka for its distributed event store capabilities, enabling real-time data streaming and processing.

-

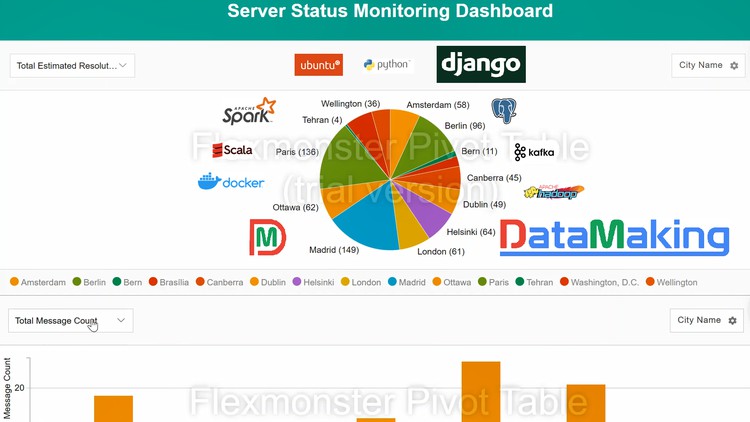

Data Visualization:

- Crafting web applications using Django to serve as a front-end interface for your data visualizations.

- Integrating Flexmonster for advanced and interactive data reporting.
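The streaming step listed under Building Your Pipeline can be illustrated without any cluster at all. The following is a minimal pure-Python sketch, not the course's code: a plain list stands in for a Kafka topic, a generator stands in for the micro-batching a streaming engine such as Spark performs, and the event fields (`host`, `status`) and all function names are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, List

# Hypothetical event shape: one dict per server log line.
Event = Dict[str, str]

# Stand-in for messages that would arrive on a Kafka topic.
SAMPLE_EVENTS: List[Event] = [
    {"host": "web-1", "status": "200"},
    {"host": "web-2", "status": "500"},
    {"host": "web-1", "status": "200"},
    {"host": "web-3", "status": "500"},
    {"host": "web-2", "status": "200"},
]


def micro_batches(events: Iterable[Event], batch_size: int) -> Iterator[List[Event]]:
    """Group an event stream into batches of at most batch_size events."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    batch: List[Event] = []
    for event in events:
        batch.append(event)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # flush the final, possibly smaller, batch
        yield batch


def count_by_status(batch: List[Event]) -> Counter:
    """Aggregate one batch: number of events per status value."""
    return Counter(event["status"] for event in batch)


def run_pipeline(events: Iterable[Event], batch_size: int = 2) -> Dict[str, int]:
    """Process the stream batch by batch and keep running totals."""
    totals: Counter = Counter()
    for batch in micro_batches(events, batch_size):
        totals.update(count_by_status(batch))
    return dict(totals)


if __name__ == "__main__":
    print(run_pipeline(SAMPLE_EVENTS))  # {'200': 3, '500': 2}
```

In a real deployment the running totals would be written to a store such as PostgreSQL after each batch so that a dashboard can read them; here they are simply returned to keep the sketch self-contained.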

Key Technologies Covered:

-

Apache Kafka:

- Learn how Kafka acts as the backbone for real-time event processing in distributed environments.

-

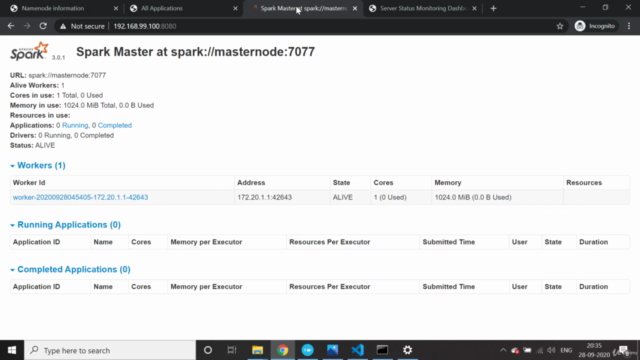

Apache Spark:

- Discover how Spark simplifies big data workloads with its unified analytics engine.

-

Hadoop:

- Understand the fundamentals of Hadoop and how it can be leveraged for distributed storage and processing.

-

PostgreSQL:

- Explore this powerful, open-source SQL database to store your data with reliability and security.

-

Django:

- Utilize Django as a robust web framework to create dynamic web applications that interface with your Spark jobs.

-

Flexmonster:

- Integrate Flexmonster to bring your data to life with interactive reports, charts, and pivot tables.

Why Take This Course?

By the end of this course, you'll have a solid understanding of how to build a scalable real-time data pipeline using some of the most powerful tools in the big data ecosystem. You'll gain practical experience by working with actual datasets and deploying your solutions in a Dockerized environment.

This course is ideal for:

- Aspiring Data Scientists

- Big Data Enthusiasts

- Software Developers looking to expand their skills

- Anyone interested in real-time data processing and analytics

What's Inside the Course?

- Hands-on Project:

  - You'll work on a capstone project in which you apply what you've learned to build your own real-time data pipeline.

- Step-by-Step Guidance:

  - Detailed instructions and best practices for setting up your development environment.

- Expert Instructors:

  - Learn from industry experts who have hands-on experience in big data technologies.

- Interactive Learning:

  - Engage with real-time datasets and see immediate results as you work through the course materials.

- Community Support:

  - Join a community of like-minded learners to share knowledge, ask questions, and help each other grow.

Embark on your journey towards mastering big data today! 🌟 Enroll Now and transform your career with the power of real-time data processing!
