Ollama: Beginner to Pro using No-Code & Python Codes

Why take this course?

-

Understanding AI & Machine Learning: Before diving into the specifics of Ollama and LLaMA, it's essential to have a foundational understanding of artificial intelligence (AI) and machine learning (ML). This includes knowledge of supervised vs. unsupervised learning, neural networks, deep learning, natural language processing (NLP), and transformer models like GPT (Generative Pre-trained Transformer), developed by OpenAI.

-

Introduction to Ollama & LLaMA: Ollama is an open-source tool that lets users run large language models on their own machines. LLaMA (Large Language Model Meta AI) is a family of large language models from Meta, released in several sizes such as 7B, 13B, and 30B parameters, with variants fine-tuned for different uses.

-

Environment Setup: Before you start, ensure you have a working Python environment with all the required packages installed. This typically includes the Ollama Python client (ollama) plus any libraries your own workflow relies on, such as the Transformers library (transformers) or PyTorch.

-

Installing & Running Models: Learn how to install and run different versions of LLaMA using Ollama. This includes downloading pre-trained models, setting up the model in your code, and interacting with it using a simple command-line interface or through a more complex application.
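Once a model has been downloaded, one quick way to confirm it is available is to ask the local Ollama server which models it has. The sketch below assumes a server running on the default address `http://localhost:11434` and uses its `/api/tags` endpoint; the helper names `parse_model_names` and `list_local_models` are made up for illustration.

```python
import json
import urllib.request


def parse_model_names(tags_json):
    """Extract model names from the JSON body returned by /api/tags."""
    return [model["name"] for model in json.loads(tags_json).get("models", [])]


def list_local_models(base_url="http://localhost:11434"):
    """Ask a running Ollama server (assumed default address) for its local models."""
    with urllib.request.urlopen(f"{base_url}/api/tags") as response:
        return parse_model_names(response.read())


# Usage (needs a running Ollama server):
# print(list_local_models())
```

If the list comes back empty, no model has been pulled yet on that machine.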

-

Interacting with the Model: Understand how to send prompts to the model and receive generated text. Explore different configurations for temperature, max_length, and other parameters that influence the output of the model.
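As a rough illustration of how these parameters are passed, the sketch below builds a request for Ollama's REST endpoint `/api/generate`. It assumes a local server on the default port and a model named `llama3.2` that has already been pulled. Note that Ollama calls the output-length limit `num_predict`, so the `max_tokens` argument here is just a convenience name for this example.

```python
import json
import urllib.request

# Assumption: a local Ollama server listening on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_payload(prompt, model="llama3.2", temperature=0.7, max_tokens=128):
    """Build the JSON body for a single, non-streaming generation request."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": {
            "temperature": temperature,  # higher values give more varied output
            "num_predict": max_tokens,   # upper bound on generated tokens
        },
    }


def generate(prompt, **kwargs):
    """Send the prompt to the server and return the generated text."""
    payload = build_generate_payload(prompt, **kwargs)
    request = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]


# Usage (needs a running Ollama server):
# print(generate("Why is the sky blue?", temperature=0.2, max_tokens=64))
```

Trying the same prompt at a low and a high temperature is a simple way to see how much the setting changes the output.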

-

Deployment Scenarios: Explore various deployment scenarios, including running models locally on your machine, deploying them on cloud services like AWS, GCP, or Azure, or using Docker containers to ensure consistency across different environments.

-

Web applications with OpenWebUI: Install and configure OpenWebUI, which provides a web-based interface for interacting with LLaMA models. Learn how to set up everything from Docker to the web browser, enabling users to interact with the models through a user-friendly chatbot interface.

-

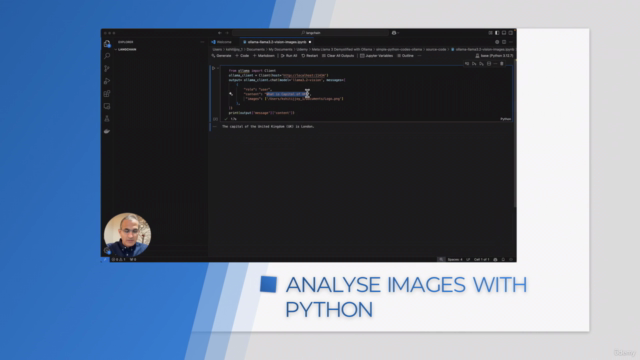

Developing with IDEs: Set up your preferred development environment, such as Jupyter Notebook, Visual Studio Code, or Google Colab, and use Ollama to write Python code for interacting with LLaMA. Understand how to print the outputs you need, handle responses, and optionally use GitHub Copilot to aid in coding.

-

Multimodal Models: Learn about multimodal models, which can process different types of data like text and images. Understand how Meta LLaMA 3.2 Vision Model works and how you can use the Ollama CLI to analyze and interact with images.
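Besides the CLI, images can also be sent to a vision model from Python. The sketch below uses Ollama's REST API rather than the CLI: the `/api/generate` endpoint accepts base64-encoded images in an `images` list. The model name `llama3.2-vision` and the helper name `build_vision_payload` are assumptions for this example.

```python
import base64


def build_vision_payload(image_bytes, prompt, model="llama3.2-vision"):
    """Build a request body that attaches one image to a text prompt."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "prompt": prompt,
        "images": [encoded],  # base64 strings, one per image
        "stream": False,
    }


# Usage (the file name is hypothetical):
# with open("photo.png", "rb") as f:
#     payload = build_vision_payload(f.read(), "Describe this image.")
```

The resulting payload would be posted to a running Ollama server in the same way as a text-only request.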

-

LangChain & ChatOllama: Get introduced to LangChain, a framework for building natural language applications using large language models like GPT-3 and LLaMA. Understand chaining concepts in LangChain, which allow you to create complex interactions by combining multiple steps of processing and output generation.
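To make the chaining idea concrete, here is a plain-Python sketch that does not use LangChain itself. It composes three steps (build a prompt, call a model, clean the output) so that each step's output feeds the next. The `fake_model` function is a stand-in for a real model call, and all names are hypothetical. LangChain expresses the same pattern with its own components and the `|` operator.

```python
from functools import reduce


def chain(*steps):
    """Compose steps left to right: each step receives the previous step's output."""
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)


def make_prompt(topic):
    """Step 1: turn a topic into a full prompt."""
    return f"Explain {topic} in one sentence."


def fake_model(prompt):
    """Step 2: stand-in for a real LLM call (no model is contacted here)."""
    return f"  [model answer to: {prompt}]  "


def clean_output(text):
    """Step 3: post-process the raw model output."""
    return text.strip()


explain = chain(make_prompt, fake_model, clean_output)
print(explain("Ollama"))
```

Swapping `fake_model` for a function that calls a local model would leave the other two steps unchanged, which is the main appeal of chaining.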

-

OpenAI Compatibility: Explore the compatibility between Ollama and OpenAI's models if you wish to use them interchangeably. This includes setting up code to work with both LLaMA and GPT-3.
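One way to picture this interchangeability: Ollama serves an OpenAI-style API under `/v1`, so the same request shape can target either service by changing the base URL and model name. The sketch below only builds the HTTP requests and does not send them. The function name, model names, and key values are placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, user_message):
    """Build an OpenAI-style chat completion request for the given base URL."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Local Ollama server (assumed default port); the key is only a placeholder.
local = build_chat_request("http://localhost:11434/v1", "ollama", "llama3.2", "Hello")

# Hosted OpenAI API; replace the key and model with real values before sending.
hosted = build_chat_request("https://api.openai.com/v1", "YOUR_API_KEY", "gpt-4o-mini", "Hello")
```

Either request could then be passed to `urllib.request.urlopen`, and the response parsed the same way in both cases.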

-

Structured Outputs: Learn how to obtain structured outputs from the model, which can be in JSON or another data format that is useful for further processing, such as integrating with APIs or automation tools.
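A minimal sketch of this idea, assuming Ollama's chat endpoint and its `format` field, which accepts a JSON schema describing the desired output. The schema, the model name, and the helper names are invented for illustration. The parsing step checks that the reply really contains the required keys before it is handed to other code.

```python
import json

# Hypothetical schema describing the structure we want back from the model.
PERSON_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "age": {"type": "integer"},
    },
    "required": ["name", "age"],
}


def build_structured_payload(prompt, model="llama3.2"):
    """Build a chat request body that asks for output matching PERSON_SCHEMA."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "format": PERSON_SCHEMA,
        "stream": False,
    }


def parse_structured(content, schema=PERSON_SCHEMA):
    """Parse the model's reply and verify that the required keys are present."""
    data = json.loads(content)
    missing = [key for key in schema["required"] if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data


# Example with a reply shaped like what the model is asked to produce:
print(parse_structured('{"name": "Ada", "age": 36}'))
```

Validating the reply is worth the extra lines, since a model can still return incomplete or malformed JSON.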

-

Tools Integration: Understand the ecosystem of tools available for use with LLaMA and Ollama, including using APIs for fetching external data like weather forecasts or any other real-time information that can be integrated into the application's workflow.
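The tool-calling flow can be sketched roughly as follows. The application describes a function to the model, the model replies with a request to call it, and the application runs the function and returns the result. The `get_weather` function is a hypothetical stand-in that makes no real API call, and the shape of the assistant message (a `tool_calls` list with `function.name` and `function.arguments`) is an assumption about the chat response format that should be checked against the official documentation.

```python
def get_weather(city):
    """Hypothetical stand-in for a real weather API lookup."""
    return f"Sunny in {city}"


# Tool description sent to the model alongside the chat messages.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]

# Maps tool names the model may request to the Python functions that serve them.
AVAILABLE = {"get_weather": get_weather}


def run_tool_calls(message):
    """Execute each tool call in an assistant message and collect tool replies."""
    results = []
    for call in message.get("tool_calls", []):
        function = call["function"]
        handler = AVAILABLE.get(function["name"])
        if handler is None:
            continue  # ignore tools this application does not provide
        results.append({"role": "tool", "content": handler(**function["arguments"])})
    return results


# Example with a hand-written assistant message (assumed response shape):
message = {
    "role": "assistant",
    "content": "",
    "tool_calls": [{"function": {"name": "get_weather", "arguments": {"city": "Paris"}}}],
}
print(run_tool_calls(message))
```

The returned tool messages would be appended to the conversation and sent back to the model so it can write the final answer.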

Throughout these steps, it's important to remember that both Ollama and LLaMA are rapidly evolving tools, and best practices, recommendations, and even the API could change over time. Always refer to the official documentation or community resources for the most up-to-date information.
