Mastering SVM: A Comprehensive Guide with Code in Python

Why take this course?

🚀 Mastering SVM: A Comprehensive Guide with Code in Python 🎓

🎉 Welcome to the World of Support Vector Machines! 🎉

🤖 What Is a Support Vector Machine (SVM)?

SVM is a supervised machine learning algorithm for classification and regression. For classification, it finds the hyperplane that separates the classes with the largest possible margin, and kernels let that hyperplane live in a high-dimensional feature space. It works well on high-dimensional data and on classes separated by nonlinear boundaries, which makes it a common choice for applications like image classification, text analysis, and anomaly detection.
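
Classification and regression SVMs differ mainly in the loss they penalize. Here is a minimal sketch, assuming labels in {-1, +1} for classification and a hypothetical tube width `epsilon = 0.1` for regression: the hinge loss is zero once a point is correctly classified and outside the margin, while the epsilon-insensitive loss used by support vector regression ignores errors smaller than epsilon.

```python
def hinge_loss(y: int, score: float) -> float:
    """Classification loss: zero once y * score >= 1, i.e. the point is on the
    correct side of the hyperplane and outside the margin."""
    return max(0.0, 1.0 - y * score)


def epsilon_insensitive_loss(target: float, prediction: float, epsilon: float = 0.1) -> float:
    """Regression (SVR) loss: deviations smaller than epsilon cost nothing."""
    return max(0.0, abs(target - prediction) - epsilon)


# Classification: label y in {-1, +1}, score = w . x + b
print(hinge_loss(+1, 2.0))  # confidently correct -> 0.0
print(hinge_loss(+1, 0.5))  # correct but inside the margin -> 0.5
print(hinge_loss(-1, 0.5))  # wrong side of the hyperplane -> 1.5

# Regression: small deviations are ignored, larger ones grow linearly
print(epsilon_insensitive_loss(3.0, 3.05))  # inside the epsilon tube -> 0.0
print(epsilon_insensitive_loss(3.0, 3.5))   # about 0.4
```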

🧮 The Magic of SVM

SVM works by finding a hyperplane that separates the classes with the maximum margin. By implicitly transforming the input data into a higher-dimensional space, SVM can separate classes even when they are not linearly separable in the original space. The resulting boundary is determined entirely by the training points closest to it, known as support vectors.
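
To make the roles of the hyperplane and the support vectors concrete, here is a small sketch on a hypothetical six-point dataset. The hyperplane `w = (1, 0)`, `b = -1` was worked out by hand for this data (it is the maximum-margin separator, scaled so that support vectors satisfy y * f(x) = 1); it is not the output of a training routine.

```python
# Hypothetical toy 2-D data set: +1 class on the right, -1 class on the left.
X = [(2.0, 0.0), (3.0, 0.0), (2.0, 1.0), (0.0, 0.0), (-1.0, 0.0), (0.0, 1.0)]
y = [1, 1, 1, -1, -1, -1]

# Maximum-margin hyperplane for this data, derived by hand: the vertical line
# where the first coordinate equals 1, scaled so support vectors give y * f(x) = 1.
w = (1.0, 0.0)
b = -1.0


def decision_function(x):
    """Signed score f(x) = w . x + b; its sign is the predicted class."""
    return w[0] * x[0] + w[1] * x[1] + b


def predict(x):
    return 1 if decision_function(x) >= 0 else -1


# Support vectors are the training points lying exactly on the margin
# boundaries, i.e. where y * f(x) == 1 (up to floating-point tolerance).
support_vectors = [x for x, label in zip(X, y)
                   if abs(label * decision_function(x) - 1.0) < 1e-9]

print("predictions:", [predict(x) for x in X])
print("support vectors:", support_vectors)
print("new point (4, 2):", predict((4.0, 2.0)))
```

Moving or deleting (3, 0) or (-1, 0) would leave this hyperplane unchanged, because neither point lies on the margin.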

✨ Key Advantages of SVM:

- Flexibility: With various kernel functions, SVM can handle complex boundaries.

- Robustness: With a soft margin (controlled by the C parameter), it tolerates some noise and outliers instead of letting every point dictate the boundary.

- Generalization: Maximizing the margin acts as a form of regularization, which helps SVM models generalize to unseen data.

- Memory Efficiency: The trained decision function depends only on the support vectors, so the model stores just that subset of the training data.
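
The memory-efficiency point follows from the dual form of the decision function, f(x) = Σᵢ αᵢ yᵢ K(xᵢ, x) + b, where only points with αᵢ > 0 contribute. The sketch below assumes hand-derived dual coefficients for a hypothetical toy dataset (one valid solution among several for this particular data) and keeps only the points with nonzero α.

```python
# Hypothetical toy data: two linearly separable classes in 2-D.
X = [(2.0, 0.0), (3.0, 0.0), (2.0, 1.0), (0.0, 0.0), (-1.0, 0.0), (0.0, 1.0)]
y = [1, 1, 1, -1, -1, -1]

# Hand-derived dual coefficients (assumption, not a trained result):
# only two training points end up with a nonzero alpha.
alphas = [0.5, 0.0, 0.0, 0.5, 0.0, 0.0]
b = -1.0


def linear_kernel(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


# After training, only the points with alpha > 0 (the support vectors) are kept.
model = [(a, label, x) for a, label, x in zip(alphas, y, X) if a > 0]


def decision_function(x):
    """f(x) = sum_i alpha_i * y_i * K(x_i, x) + b over the stored support vectors."""
    return sum(a * label * linear_kernel(sv, x) for a, label, sv in model) + b


print("stored points:", len(model), "of", len(X))
print("f(4, 0) =", decision_function((4.0, 0.0)))
print("f(-2, 3) =", decision_function((-2.0, 3.0)))
```

Swapping `linear_kernel` for another kernel changes the boundary but not this storage pattern. Note that training itself may still touch every point; the saving applies to the fitted model.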

📈 Maximizing Margin: A Path to Better Models

The concept of maximum margin is central to SVM. It means choosing the decision boundary that lies as far as possible from the nearest training points of each class. A wider margin tends to improve generalization and robustness, reducing the risk of misclassifying unseen data.
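
The margin can be measured directly: for a hyperplane (w, b), its geometric margin is minᵢ yᵢ(w·xᵢ + b) / ‖w‖, the distance to the closest training point. This quick sketch compares two hand-picked separators on a hypothetical toy dataset. In the standard formulation, maximizing the full margin width 2/‖w‖ is equivalent to minimizing ½‖w‖² subject to yᵢ(w·xᵢ + b) ≥ 1.

```python
import math

# Hypothetical toy data: two linearly separable classes in 2-D.
X = [(2.0, 0.0), (3.0, 0.0), (2.0, 1.0), (0.0, 0.0), (-1.0, 0.0), (0.0, 1.0)]
y = [1, 1, 1, -1, -1, -1]


def geometric_margin(w, b):
    """Smallest signed distance y_i * (w . x_i + b) / ||w|| over the data.
    It is positive only if the hyperplane separates the two classes."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    closest = min(label * (w[0] * x[0] + w[1] * x[1] + b) for x, label in zip(X, y))
    return closest / norm


wide = geometric_margin((1.0, 0.0), -1.0)    # vertical line halfway between the classes
tilted = geometric_margin((1.0, 0.5), -1.5)  # also separates, but passes closer to (2, 0)
print(f"vertical line: {wide:.3f}")
print(f"tilted line:   {tilted:.3f}")
```

Both lines classify every training point correctly, but the vertical one keeps a margin of 1.000 versus about 0.447, which is the property SVM optimizes for.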

🔍 Real-World Applications of SVM

SVM has a wide array of applications:

- Image Recognition: Used for tasks like facial recognition and object detection, typically as a classifier applied to extracted image features (for example, HOG descriptors).

- Text Classification: Great for sentiment analysis, spam detection, and more.

- Anomaly Detection: Suited for identifying unusual patterns or behaviors in data, for example with a one-class SVM.

👇 Understanding the Kernel Trick 👇

The kernel trick is a mathematical technique that lets SVM learn nonlinear decision boundaries. A kernel function computes inner products as if the data had been mapped into a higher-dimensional feature space, without ever computing that mapping explicitly. A linear separation in the feature space corresponds to a nonlinear boundary in the original space, so SVM can handle complex problems with the same linear machinery.
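
The kernel trick can be checked numerically. A minimal sketch, assuming the degree-2 homogeneous polynomial kernel K(x, z) = (x·z)² on 2-D inputs: its value equals an ordinary dot product after the explicit 3-D feature map φ(x) = (x₁², √2·x₁x₂, x₂²), yet the kernel itself never builds those 3-D vectors.

```python
import math


def poly_kernel(x, z):
    """Degree-2 homogeneous polynomial kernel K(x, z) = (x . z)^2, computed in 2-D."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2


def feature_map(x):
    """Explicit 3-D feature map phi with phi(x) . phi(z) = (x . z)^2."""
    return (x[0] ** 2, math.sqrt(2) * x[0] * x[1], x[1] ** 2)


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


x, z = (1.0, 2.0), (3.0, 4.0)
implicit = poly_kernel(x, z)                    # stays in the original 2-D space
explicit = dot(feature_map(x), feature_map(z))  # builds the 3-D vectors first
print(implicit, explicit)  # both 121, up to floating-point rounding
```

For higher-degree polynomials or the RBF kernel, the explicit feature space grows very large (infinite for RBF), which is why computing K directly matters.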

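🎯 Margin and Support Vectors

Support vectors are the training points that define the hyperplane. The margin is the distance between the hyperplane and the nearest data points from each class. The regularization parameter C controls the trade-off between maximizing the margin and minimizing training errors: a large C penalizes misclassified or margin-violating points heavily, giving a narrower margin, while a small C allows a wider margin at the cost of more training errors. Choosing C well is critical for balancing model simplicity and accuracy.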
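
The effect of C shows up in the soft-margin objective ½‖w‖² + C·Σᵢ max(0, 1 - yᵢ(w·xᵢ + b)). This sketch does not train anything: it evaluates the objective for two hand-picked hyperplanes on a hypothetical dataset with one outlier, to show how the preferred trade-off flips as C grows.

```python
# Hypothetical toy data: a clean two-class set plus one -1 outlier at (1.5, 0).
X = [(2.0, 0.0), (3.0, 0.0), (2.0, 1.0), (0.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (1.5, 0.0)]
y = [1, 1, 1, -1, -1, -1, -1]


def soft_margin_objective(w, b, C):
    """0.5 * ||w||^2 + C * sum of hinge losses max(0, 1 - y_i * (w . x_i + b))."""
    regularizer = 0.5 * sum(wi * wi for wi in w)
    hinge = sum(max(0.0, 1.0 - label * (w[0] * x[0] + w[1] * x[1] + b))
                for x, label in zip(X, y))
    return regularizer + C * hinge


# Two hand-picked candidate hyperplanes (not optimized solutions):
candidates = {
    "wide margin, outlier misclassified": ((1.0, 0.0), -1.0),
    "narrow margin, outlier respected": ((4.0, 0.0), -7.0),
}

for C in (1.0, 10.0):
    scores = {name: soft_margin_objective(w, b, C) for name, (w, b) in candidates.items()}
    best = min(scores, key=scores.get)
    print(f"C={C}: {scores} -> prefers '{best}'")
```

With C = 1 the wide-margin hyperplane has the lower objective (2.0 versus 8.0) even though it misclassifies the outlier; with C = 10 the narrow-margin hyperplane wins (8.0 versus 15.5).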

📊 Nonlinear Classification with SVM

SVM's ability to handle nonlinear classification problems is one of its most significant advantages. Through kernels such as the polynomial and RBF kernels, SVM implicitly maps data into higher dimensions, where the classes may become linearly separable.
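
The XOR pattern is the classic case where no straight line separates the classes in the original 2-D space. A minimal sketch, using an explicit, hypothetical feature map that appends the product x₁x₂: in the lifted space a plain linear rule separates the four points. A kernel SVM reaches the same effect implicitly; for example, the polynomial kernel (x·z + 1)² includes this product term in its implicit feature space.

```python
# Hypothetical XOR-style data: no straight line in 2-D separates the classes.
X = [(1.0, 1.0), (-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0)]
y = [1, 1, -1, -1]


def lift(x):
    """Map (x1, x2) to (x1, x2, x1 * x2) by adding a product feature."""
    return (x[0], x[1], x[0] * x[1])


# In the lifted 3-D space, the plane with w = (0, 0, 1), b = 0 separates the data.
w, b = (0.0, 0.0, 1.0), 0.0


def predict(x):
    score = sum(wi * zi for wi, zi in zip(w, lift(x))) + b
    return 1 if score > 0 else -1


print([predict(x) for x in X])  # [1, 1, -1, -1], matching y
```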

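🔧 Training and Optimizing Your SVM

Training an SVM means solving a quadratic programming (QP) problem to find the optimal hyperplane. Sequential Minimal Optimization (SMO) solves it efficiently by repeatedly optimizing just two Lagrange multipliers at a time, a subproblem with a closed-form solution, so no general-purpose QP solver is needed. Because training uses only kernel values, the data is never mapped to higher dimensions explicitly. Kernel SVM training still tends to scale at least quadratically with the number of samples, so very large datasets are often better served by linear SVM solvers.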
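
The pairwise update at the heart of SMO fits in a short function. This is a sketch of a simplified SMO variant, not Platt's full algorithm: the second multiplier is chosen at random rather than by Platt's selection heuristics, and the function name `simplified_smo`, its defaults (`C=10.0`, `tol=1e-3`, `max_passes=10`), and the toy dataset are illustrative assumptions. Each update keeps Σᵢ αᵢyᵢ = 0 and 0 ≤ αᵢ ≤ C.

```python
import random


def linear_kernel(u, v):
    """Plain dot product; swap in another kernel function for nonlinear SVMs."""
    return sum(ui * vi for ui, vi in zip(u, v))


def simplified_smo(X, y, C=10.0, tol=1e-3, max_passes=10, max_iter=10_000,
                   kernel=linear_kernel, seed=0):
    """Simplified SMO: repeatedly pick a pair of Lagrange multipliers (i, j)
    and optimize them analytically while keeping sum(alpha_i * y_i) == 0
    and 0 <= alpha_i <= C. Returns the multipliers and the threshold b."""
    rng = random.Random(seed)
    m = len(X)
    K = [[kernel(X[i], X[j]) for j in range(m)] for i in range(m)]  # Gram matrix
    alpha = [0.0] * m
    b = 0.0

    def f(i):
        # Dual-form decision value for training point i.
        return sum(alpha[k] * y[k] * K[k][i] for k in range(m)) + b

    passes = iterations = 0
    while passes < max_passes and iterations < max_iter:
        iterations += 1
        changed = 0
        for i in range(m):
            E_i = f(i) - y[i]
            # Only touch alpha_i if it violates the KKT conditions by more than tol.
            if not ((y[i] * E_i < -tol and alpha[i] < C) or (y[i] * E_i > tol and alpha[i] > 0)):
                continue
            j = rng.choice([k for k in range(m) if k != i])  # random partner
            E_j = f(j) - y[j]
            a_i_old, a_j_old = alpha[i], alpha[j]
            # Feasible range for alpha_j on the line y_i*a_i + y_j*a_j = const.
            if y[i] != y[j]:
                L, H = max(0.0, a_j_old - a_i_old), min(C, C + a_j_old - a_i_old)
            else:
                L, H = max(0.0, a_i_old + a_j_old - C), min(C, a_i_old + a_j_old)
            eta = 2 * K[i][j] - K[i][i] - K[j][j]  # second derivative along the line
            if L == H or eta >= 0:
                continue
            # Unconstrained optimum for alpha_j, clipped into [L, H].
            new_a_j = min(H, max(L, a_j_old - y[j] * (E_i - E_j) / eta))
            if abs(new_a_j - a_j_old) < 1e-5:
                continue
            alpha[j] = new_a_j
            alpha[i] = a_i_old + y[i] * y[j] * (a_j_old - new_a_j)
            d_i, d_j = alpha[i] - a_i_old, alpha[j] - a_j_old
            b1 = b - E_i - y[i] * d_i * K[i][i] - y[j] * d_j * K[i][j]
            b2 = b - E_j - y[i] * d_i * K[i][j] - y[j] * d_j * K[j][j]
            # Prefer a threshold computed from a multiplier strictly inside (0, C).
            if 0 < alpha[i] < C:
                b = b1
            elif 0 < alpha[j] < C:
                b = b2
            else:
                b = (b1 + b2) / 2
            changed += 1
        passes = passes + 1 if changed == 0 else 0
    return alpha, b


# Hypothetical toy data: two linearly separable classes in 2-D.
X = [(2.0, 0.0), (3.0, 0.0), (2.0, 1.0), (0.0, 0.0), (-1.0, 0.0), (0.0, 1.0)]
y = [1, 1, 1, -1, -1, -1]

alpha, b = simplified_smo(X, y)
# With a linear kernel the primal weights are w = sum_i alpha_i * y_i * x_i.
w = [sum(a * label * x[d] for a, label, x in zip(alpha, y, X)) for d in range(2)]
predictions = [1 if w[0] * x[0] + w[1] * x[1] + b >= 0 else -1 for x in X]
print("w =", w, "b =", b)
print("support vectors:", [x for a, x in zip(alpha, X) if a > 1e-6])
print("training predictions:", predictions)
```

For nonlinear kernels, predictions would use the dual form Σᵢ αᵢyᵢK(xᵢ, x) + b directly instead of recovering w. Production libraries such as LIBSVM add working-set selection heuristics, kernel caching, and shrinking on top of this basic two-variable update.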

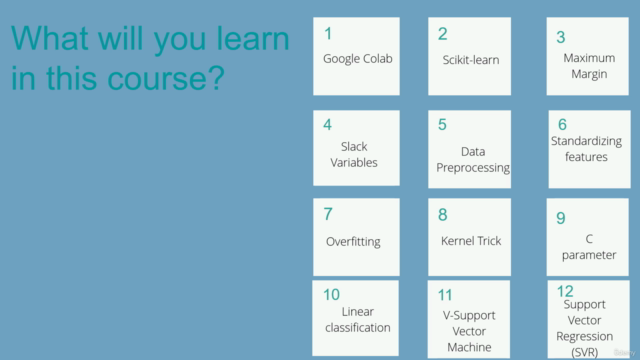

📚 Join Us on This Journey!

In this course, you'll dive deep into the theory behind SVM, understand its implementation with Python code, and learn how to apply it to solve real-world problems. Whether you're a beginner or an experienced data scientist, this course will equip you with the knowledge to leverage the full potential of SVM in your projects.

👩‍🏫 Let's Master SVM Together!

Embark on this educational adventure and unlock the mysteries of Support Vector Machines with our expert-led course, filled with insights, practical exercises, and hands-on coding examples to guide you every step of the way. 🚀

Enroll now and transform your data into predictions with confidence! 🎈
