Apache Kafka Guru - Zero to Hero in Minutes (Mac/Windows)

Why take this course?

Let's explore each section of the Apache Kafka learning journey in more detail:

Section 3 – Apache Kafka Architecture

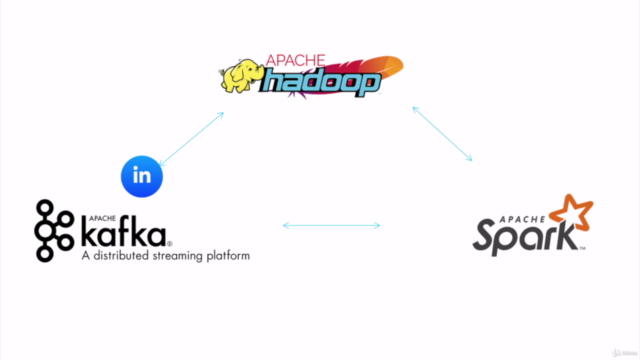

Why do we need Apache Kafka? Apache Kafka is a distributed streaming platform for moving high volumes of data between applications. It is designed to be fault-tolerant, scalable, and durable. Typical uses include building real-time streaming data pipelines and letting applications process streams of records as they arrive instead of batching them.

Section 4 – Anatomy of Apache Kafka

Apache Kafka Architecture Kafka is built from a group of brokers that work together as a Kafka cluster. Each broker is an instance of the Kafka server, typically running on its own machine in your infrastructure. The architecture scales both horizontally (more brokers) and vertically (better, faster hardware).

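Kafka Broker A Kafka broker is a server that stores topic partitions and serves producer and consumer requests for them. Brokers do not come in different types; leader and follower are roles a broker holds per partition. For each partition, one broker acts as the leader and the other brokers holding a copy act as followers, so the same broker can lead some partitions and follow others. Each topic has at least one partition, and each partition can be replicated across several brokers.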

Kafka Topic Explained A topic in Kafka is a category or feed name to which records are published. These records can be read by client applications interested in the data.

Kafka Topic Partitions Partitions are a way to split a topic into multiple data streams that can be processed and consumed independently. They enable both scalability and fault tolerance.
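As a rough illustration of how keyed records spread across partitions, here is a minimal Python sketch. It is a toy model, not Kafka client code: the function name `choose_partition` is made up for this example, and CRC32 stands in for the murmur2 hash that Kafka's Java client uses for keyed records.

```python
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a record key to a partition index in [0, num_partitions)."""
    # Assumption: CRC32 is a stand-in hash for illustration only.
    return zlib.crc32(key) % num_partitions


# Hypothetical records: (key, value) pairs for a topic with 3 partitions.
records = [(b"user-1", "login"), (b"user-2", "login"), (b"user-1", "logout")]
partitions = {p: [] for p in range(3)}
for key, value in records:
    partitions[choose_partition(key, 3)].append((key, value))

for p, items in partitions.items():
    print(p, items)
```

Because the partition depends only on the key, all records for `user-1` land in the same partition and keep their relative order there, while different keys can be processed in parallel.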

Kafka Offsets An offset is a sequential integer that uniquely identifies each record within a partition. Consumers commit the offsets they have processed, which lets them resume from the last committed position after a failure or rebalance.
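The idea of resuming from a committed offset can be sketched with a small in-memory log. This is a toy model under simplifying assumptions, not a Kafka API: `PartitionLog` and its methods are hypothetical names, and the "commit" is just a local variable.

```python
class PartitionLog:
    """Toy append-only log for one partition (illustration only)."""

    def __init__(self):
        self._records = []

    def append(self, value):
        # A record's offset is its position in the log.
        self._records.append(value)
        return len(self._records) - 1

    def read_from(self, offset, max_records=10):
        # Return (offset, value) pairs starting at the given offset.
        chunk = self._records[offset:offset + max_records]
        return list(enumerate(chunk, start=offset))


log = PartitionLog()
for value in ["a", "b", "c", "d"]:
    log.append(value)

committed = 0  # next offset this consumer should read
first_batch = log.read_from(committed, max_records=2)
committed = first_batch[-1][0] + 1  # commit after processing the batch

# Simulated restart: the consumer resumes from the committed offset.
resumed = log.read_from(committed)
print(first_batch, resumed)
```

As in Kafka, the committed value here is the offset of the next record to read, not the last one processed.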

How Replication Works in Kafka Each partition is replicated across a set of brokers. One broker in this set is the leader for the partition, and the others are followers. The leader handles all write requests and, by default, all read requests for the partition, while the followers copy its data to stay in sync.
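The leader and follower roles can be sketched with a toy model. This is a deliberate simplification, not how Kafka is implemented: `ReplicatedPartition` is a hypothetical class, replication is triggered by hand, and the failover rule (pick the most caught-up survivor) is an assumption standing in for Kafka's controller choosing a new leader from the in-sync replicas.

```python
class ReplicatedPartition:
    """Toy model of one partition replicated across several brokers."""

    def __init__(self, broker_ids):
        self.logs = {b: [] for b in broker_ids}  # broker id -> local copy
        self.leader = broker_ids[0]

    def produce(self, value):
        # Writes go to the leader only; returns the record's offset.
        leader_log = self.logs[self.leader]
        leader_log.append(value)
        return len(leader_log) - 1

    def replicate(self):
        # Each follower copies whatever it is missing from the leader.
        leader_log = self.logs[self.leader]
        for broker, log in self.logs.items():
            if broker != self.leader:
                log.extend(leader_log[len(log):])

    def fail_leader(self):
        # Assumption: the most caught-up surviving replica takes over.
        del self.logs[self.leader]
        self.leader = max(self.logs, key=lambda b: len(self.logs[b]))


partition = ReplicatedPartition([1, 2, 3])
partition.produce("x")
partition.produce("y")
partition.replicate()
partition.fail_leader()
print(partition.leader, partition.logs[partition.leader])
```

Records that were replicated before the failure survive it, which is the property replication is meant to provide.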

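ZooKeeper In ZooKeeper-based deployments, ZooKeeper coordinates cluster metadata such as topic and partition information, broker membership, and controller election. Only very old consumer clients stored their offsets in ZooKeeper; current clients commit offsets to the internal `__consumer_offsets` topic. Newer Kafka releases can also run without ZooKeeper by using KRaft mode.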

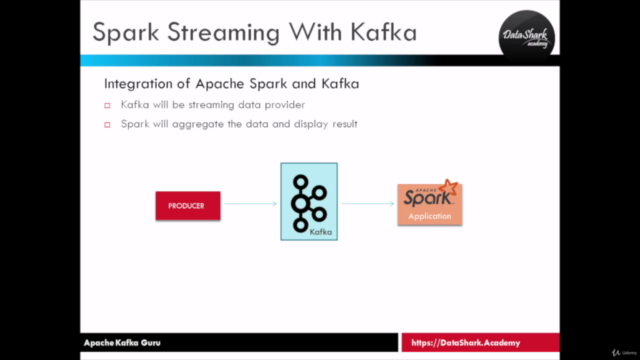

Kafka Producers Producers are applications that publish records to Kafka topics. They handle the task of sending data to Kafka.

Kafka Consumers Consumers are applications that subscribe to one or more topics and process the stream of records that they receive from those topics.

Putting everything together The combination of these components allows for a robust system capable of handling high-throughput data pipelines, real-time streaming analytics, event sourcing, stream processing, and pub/sub messaging between different systems.

Section 5 – Designing Apache Kafka Applications

Design Considerations When designing Kafka applications, data retention policies, consumer group behavior, fault tolerance, and performance tuning are all crucial to the success of your application.
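Consumer group behavior is easier to reason about with a small example. The sketch below spreads a topic's partitions over the members of one group in round-robin order. It is a simplified stand-in, not Kafka's actual assignor code, and `assign_round_robin` is a hypothetical name.

```python
def assign_round_robin(partitions, consumers):
    """Spread partitions over the consumers of one group, one at a time."""
    members = sorted(consumers)
    if not members:
        raise ValueError("a consumer group needs at least one member")
    assignment = {member: [] for member in members}
    for i, partition in enumerate(sorted(partitions)):
        assignment[members[i % len(members)]].append(partition)
    return assignment


# Four partitions shared by two consumers.
balanced = assign_round_robin(range(4), ["c1", "c2"])
# Two partitions and three consumers: one consumer gets nothing.
oversized = assign_round_robin(range(2), ["c1", "c2", "c3"])
print(balanced)
print(oversized)
```

The second call shows a common design constraint: within a group, each partition goes to exactly one consumer, so adding more consumers than partitions leaves the extras idle.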

Sections 6 & 7 – Expert Level and Advanced Hands-On

In these sections, you will get hands-on experience with real Kafka implementations:

Setup Apache Kafka on Local Computer (Mac & Linux users) Learn how to set up a local Kafka cluster on your machine.

Setup Apache Kafka on Local Computer (Windows PC users) For Windows users, specific instructions are provided to set up Kafka in a local development environment.

How to connect to HDP Sandbox terminal (Windows PC users) Connect to a Kafka cluster from a terminal in the Hortonworks Data Platform (HDP) sandbox.

Let's fire up a local cluster (Windows PC users) Start your local Kafka cluster and verify its health.

How to create some Kafka Topics Learn how to define new topics and understand the implications of topic configuration settings.

Peek inside a Kafka topic Inspect a Kafka topic to see how records are organized and managed.

How to delete a Kafka topic Understand how to clean up resources by deleting topics when they are no longer needed.

Kafka Producers and Consumers Write and run simple producer and consumer applications to understand the end-to-end data flow.

Advanced Hands-On Explore advanced concepts such as consumer group management, handling message serialization/deserialization, implementing fault tolerance with idempotent producers, and tuning Kafka performance for best throughput and latency.
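To give a feel for what an idempotent producer buys you, here is a toy sketch of the broker-side idea: each producer numbers its records per partition, and a retried record with an already-seen sequence number is not appended again. The class `DedupPartition` is hypothetical and the model leaves out details of the real protocol such as producer epochs and batching.

```python
class DedupPartition:
    """Toy partition that drops duplicate retries from producers."""

    def __init__(self):
        self.log = []
        self.last_seq = {}  # producer id -> last accepted sequence number

    def append(self, producer_id, seq, value):
        last = self.last_seq.get(producer_id, -1)
        if seq <= last:
            # Already written: a retry after a lost acknowledgement.
            return False
        if seq != last + 1:
            # A gap means an earlier record is missing.
            raise ValueError("out-of-order sequence number")
        self.log.append(value)
        self.last_seq[producer_id] = seq
        return True


topic_partition = DedupPartition()
first = topic_partition.append(producer_id=7, seq=0, value="order-created")
retry = topic_partition.append(producer_id=7, seq=0, value="order-created")
second = topic_partition.append(producer_id=7, seq=1, value="order-paid")
print(first, retry, second, topic_partition.log)
```

Without the sequence check, the retry would have written `order-created` twice; with it, retries are safe to send.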

Conclusion

The journey through Apache Kafka combines an understanding of its architecture and anatomy with hands-on experience of working with it. By the end of this learning path, you should be proficient in designing and implementing real-world Kafka applications and handling big data scenarios effectively. DataShark Academy provides a structured approach to mastering Kafka through its accelerated learning programs, taught by professionals with extensive expertise in the field.
