Data Engineering with Spark Databricks Delta Lake Lakehouse

Why take this course?

🚀 Master Data Engineering with Spark & Databricks Delta Lake Lakehouse!

Course Headline:

🌟 "Apache Spark, Databricks Lakehouse, Delta Lake, Delta Tables, and Delta Caching for Scalable Data Engineering"

Course Description:

Embark on a transformative journey into the world of Data Engineering with our comprehensive course designed to equip you with the tools and skills necessary to harness the power of Apache Spark on Databricks' Lakehouse platform. 📊💻

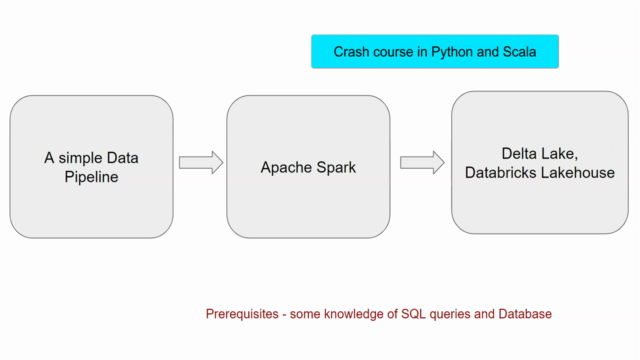

Why This Course?

Data is the new oil, and in today's data-centric world, Data Engineers are pivotal in transforming raw data into actionable insights. With this course, you'll not only learn the intricacies of Apache Spark and the Databricks Lakehouse architecture but also gain hands-on experience with Delta Tables, Delta Caching, and real-world analytics using Python and Scala.

🎓 What You Will Learn:

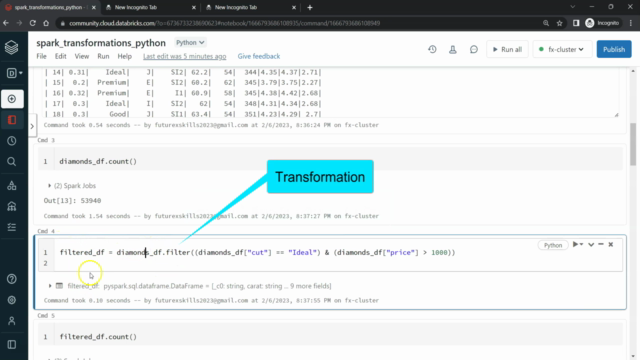

- Data Analytics with Python & Scala using Spark: Dive into the world of data analytics, learning to leverage Python and Scala within the Apache Spark ecosystem.

- Spark SQL and Databricks SQL for Analytics: Master Spark SQL and Databricks SQL to perform powerful analytics tasks efficiently.

- Building a Data Pipeline with Apache Spark: Develop your own robust data pipeline using Apache Spark, the cornerstone of big data processing.

- Proficiency in Databricks Community Edition: Become adept at navigating and using Databricks Community Edition to its fullest potential.

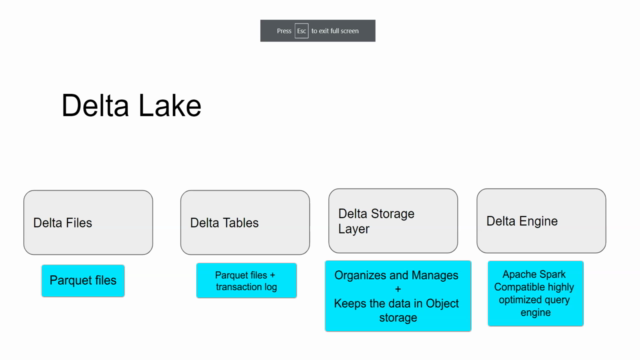

- Delta Table Management: Learn to manage Delta tables, including inspecting version history, restoring a table to an earlier version, and querying past snapshots with time travel.

- Delta Cache Utilization: Speed up repeated reads with Delta Cache, which keeps copies of remote data files on the cluster's local storage.

- Working with Delta Tables and Databricks File System (DBFS): Get hands-on experience working with the robust features of Delta Tables and DBFS.

- Real-World Scenarios: Gain insights from experienced instructors through practical, real-world scenarios that bring theoretical knowledge to life. 🌐🔍

Course Structure:

- Introduction to Databricks Community Edition & Basic Pipeline Development: Get started with the fundamentals of Databricks and create your first Spark pipeline.

- Advanced Topics: As you become comfortable, explore more complex topics such as analytics with Python and Scala, Spark transformations, actions, and joins, and Spark SQL.

- Delta Table Operations & Optimization: Learn to operate a Delta table effectively, including managing version history, restoring data, and leveraging time travel capabilities using Spark and Databricks SQL.

- Query Performance Enhancement with Delta Cache: Understand how to use Delta Cache to optimize the performance of your queries and improve your data processing workflows.
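
To make the version history, restore, and time travel ideas from the Delta table module concrete, here is a minimal sketch in plain Python. It is a toy in-memory model, not Delta Lake's API: the class `ToyVersionedTable` and all of its methods are hypothetical names invented for illustration, and it stores a full snapshot per write, which a real Delta table does not do. In Delta Lake itself the corresponding operations are exposed through SQL such as `DESCRIBE HISTORY`, `VERSION AS OF`, and `RESTORE TABLE`.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Row = Dict[str, object]


@dataclass
class ToyVersionedTable:
    """Hypothetical toy table: every write commits a new, numbered snapshot."""

    # Version 0 is the empty table created at construction time.
    snapshots: List[List[Row]] = field(default_factory=lambda: [[]])
    operations: List[str] = field(default_factory=lambda: ["CREATE"])

    def latest_version(self) -> int:
        return len(self.snapshots) - 1

    def read(self, version: Optional[int] = None) -> List[Row]:
        """Read the latest snapshot, or an older one ("time travel")."""
        chosen = self.latest_version() if version is None else version
        return [dict(row) for row in self.snapshots[chosen]]

    def _commit(self, rows: List[Row], operation: str) -> int:
        # Old snapshots are never modified; a write only adds a new version.
        self.snapshots.append([dict(row) for row in rows])
        self.operations.append(operation)
        return self.latest_version()

    def append(self, rows: List[Row]) -> int:
        return self._commit(self.read() + rows, "APPEND")

    def delete_where(self, predicate: Callable[[Row], bool]) -> int:
        kept = [row for row in self.read() if not predicate(row)]
        return self._commit(kept, "DELETE")

    def restore(self, version: int) -> int:
        """Restoring is itself a new commit, so history is preserved."""
        return self._commit(self.read(version), f"RESTORE to v{version}")

    def history(self) -> List[Tuple[int, str]]:
        return list(enumerate(self.operations))


table = ToyVersionedTable()
v1 = table.append([{"id": 1, "city": "Oslo"}, {"id": 2, "city": "Lima"}])
v2 = table.delete_where(lambda row: row["id"] == 2)

before_delete = table.read(version=v1)  # time travel to the pre-delete state
v3 = table.restore(v1)                  # undo the delete with a new commit
```

The point of the sketch is the design choice, not the implementation: because writes only add versions, an accidental delete can be inspected and undone without losing the record that it happened.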

Optional Lectures on AWS Integration:

- Setting Up a Databricks Account on AWS: Get to grips with setting up and managing your Databricks account within the AWS ecosystem.

- Running Notebooks Within a Databricks AWS Account: Learn how to execute and manage notebooks in a Databricks environment on AWS.

- Building an ETL Pipeline with Delta Live Tables: Explore advanced concepts by building an ETL pipeline using Delta Live Tables, which can further your understanding of end-to-end data processing within the AWS cloud environment.

This course is tailored for beginners in Data Engineering who have no prior experience with Python or Scala but have some knowledge of databases and SQL. Upon completion, you will be well-equipped to tackle the demands of a real-world Data Engineer role, armed with practical experience and a deep understanding of Spark and Lakehouse concepts. 🎓🚀

Join us on this exciting journey into the realm of Data Engineering and become a part of the data revolution! Enroll now to secure your future in the field of big data.
