Master Apache Spark using Spark SQL and PySpark 3

Why take this course?

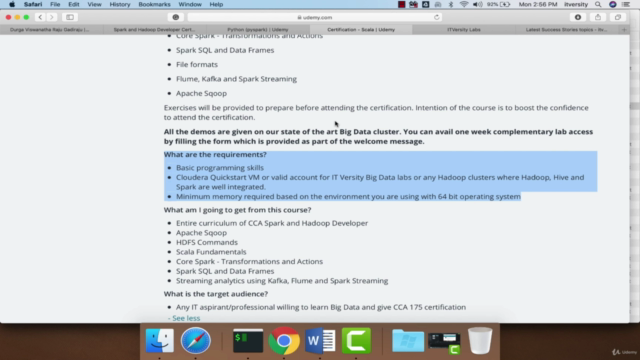

This comprehensive course covers the Hadoop and Spark skills required to build data engineering applications using Python, SQL, and Spark. It takes you through the main aspects of data engineering, with a focus on using Apache Spark for large-scale data processing tasks. Here's a breakdown of what the course covers:

-

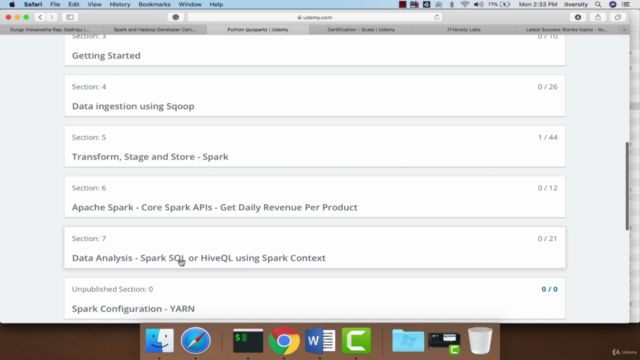

Introduction to HDFS Commands: You'll start by getting familiar with the basic commands used to interact with Hadoop Distributed File System (HDFS), such as

putorcopyFromLocalto copy files into HDFS, anddforduto inspect file sizes. -

Spark SQL Fundamentals: You'll learn how to use Spark SQL for basic transformations and managing tables with Data Definition Language (DDL) and Data Manipulation Language (DML). You'll also explore SQL functions for manipulating strings, dates, and handling null values.

- Spark DataFrame APIs: This section focuses on data processing using the Spark (PySpark) DataFrame APIs, covering column projection, basic transformations such as filtering and aggregation, sorting, and DataFrame operations such as joins and window functions for advanced analytical tasks.

- Spark Metastore: You'll understand how Spark SQL databases and tables integrate with Spark DataFrame APIs.

- Development and Deployment of Spark Applications: This part will guide you through setting up a Python virtual environment and project in PyCharm, understanding the complete lifecycle of Spark application development, building and zipping your application, and deploying it to a cluster for execution.

-

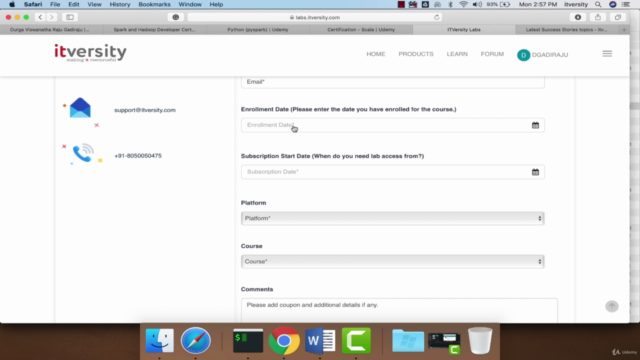

Real-World Application: The demos provided in this course are based on real-world scenarios and are executed on a Big Data cluster. You will also get access to a lab environment for one month to practice what you've learned.
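
The command-line steps named in the outline (copying files into HDFS, inspecting sizes, zipping an application, and submitting it to a cluster) can be sketched as a small Python helper. This is only an illustration and not taken from the course material: the file names (`orders.csv`, `app.py`), the HDFS path, the `demo_app` package, and the choice of YARN in cluster mode are all assumptions. The helper only builds the argument lists, so it runs without a Hadoop or Spark installation.

```python
from __future__ import annotations

import shlex
import zipfile
from pathlib import Path


def hdfs_put(local_path: str, hdfs_dir: str) -> list[str]:
    """Command that copies a local file into HDFS (-copyFromLocal is used the same way)."""
    return ["hdfs", "dfs", "-put", local_path, hdfs_dir]


def hdfs_du(hdfs_path: str) -> list[str]:
    """Command that reports the summarized (-s), human-readable (-h) size of a path."""
    return ["hdfs", "dfs", "-du", "-s", "-h", hdfs_path]


def hdfs_df() -> list[str]:
    """Command that reports overall file system capacity and usage."""
    return ["hdfs", "dfs", "-df", "-h"]


def zip_package(package_dir: str, zip_path: str) -> list[str]:
    """Zip all .py files under package_dir, keeping the package folder name in the archive."""
    root = Path(package_dir)
    archived = []
    with zipfile.ZipFile(zip_path, "w") as zf:
        for path in sorted(root.rglob("*.py")):
            arcname = path.relative_to(root.parent).as_posix()
            zf.write(path, arcname)
            archived.append(arcname)
    return archived


def spark_submit(entry_point: str, zip_path: str) -> list[str]:
    """Command that submits entry_point with the zipped package on the Python path."""
    return [
        "spark-submit",
        "--master", "yarn",            # assumption: the cluster uses YARN
        "--deploy-mode", "cluster",    # assumption: the driver runs on the cluster
        "--py-files", zip_path,
        entry_point,
    ]


if __name__ == "__main__":
    # Hypothetical names, printed only; nothing is executed here.
    commands = (
        hdfs_put("orders.csv", "/user/demo/retail"),
        hdfs_du("/user/demo/retail"),
        hdfs_df(),
        spark_submit("app.py", "demo_app.zip"),
    )
    for cmd in commands:
        print(shlex.join(cmd))
```

On a machine where the `hdfs` and `spark-submit` clients are installed, each list could be passed to `subprocess.run` to execute the step; building the commands as lists rather than strings avoids shell-quoting problems with paths.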

Here are some additional tips to enhance your learning experience:

- Interactive Learning: Make sure to actively engage with the course material by running code snippets in your local development environment or using the provided labs. Hands-on practice is crucial for understanding and retaining new concepts.

- Understanding Hadoop Ecosystem: Before diving into Spark, ensure you have a good grasp of the Hadoop ecosystem, as Spark often runs on top of it. Familiarize yourself with HDFS, YARN, and MapReduce, and with how Spark's in-memory processing differs from the disk-based MapReduce model.

- Python Proficiency: A solid understanding of Python is essential for this course. If you're not proficient, consider taking a Python course first.

- Spark Concepts: Brush up on Spark concepts such as resilience, scalability, and fault tolerance to better understand why Spark is a powerful tool for data engineering tasks.

- Community Engagement: Engage with the Spark community by attending meetups, reading blogs, or participating in forums like Stack Overflow or the Apache Spark mailing list. This can provide additional insights and help you stay up-to-date on best practices.

By following this course outline and incorporating these tips, you'll be well on your way to mastering the required skills to build robust data engineering applications using Python, SQL, and Spark.

Comidoc Review

Our Verdict

The 'Master Apache Spark using Spark SQL and PySpark 3' course truly shines when it comes to providing in-depth knowledge of Spark SQL and PySpark. While there are minor issues concerning setup and accent comprehension, these drawbacks do not significantly impact the overall value. The course excels in its blend of theory and hands-on exercises while ensuring relevance in today's data engineering landscape by focusing on PySpark 3 – making it a worthy investment for both beginners and seasoned professionals.

What We Liked

- The course provides a comprehensive overview of both Spark SQL and PySpark 3, making it a one-stop solution for data engineers looking to enhance their skillset in these areas.

- Real-world examples, hands-on exercises, and theory blend seamlessly throughout the content, ensuring that learners can grasp complex concepts with ease.

- The PySpark 3 focus keeps the course relevant, aligning with the current demands of data engineering projects.

- The instructor effectively breaks down complex topics into understandable components.

Potential Drawbacks

- Non-native English speakers may find the instructor's accent challenging to comprehend due to fast speech.

- Instructions for AWS Cloud9 setup are not well defined, leading to confusion and wasting learners' time.

- Coverage of simple, easy-to-understand HDFS commands could be reduced or removed, allowing more focus on crucial topics.

- Three installation sections could be condensed, with some instructions combined into single sections.