Data Engineering Master Course: Spark/Hadoop/Kafka/MongoDB

Why take this course?

🚀 Data Engineering Master Course: Spark/Kafka/Hadoop/Flume/Hive/Sqoop/MongoDB 📚

Unlock the Power of Big Data with Hands-On Learning!

Course Headline: Dive deep into the world of Data Engineering with this comprehensive, hands-on course designed for professionals looking to master Big Data technologies like Spark, Kafka, Hadoop, Flume, Hive, Sqoop, and MongoDB. 💻✨

Course Overview:

Welcome to the Data Engineering Master Course where you will embark on a journey to understand and master some of the most critical tools in the field of Big Data! This course is meticulously crafted to take you from the basics to advanced levels, ensuring you gain practical, real-world experience.

Section 1: Hadoop Distributed File System (HDFS) 🗂️

Kickstart your learning by getting acquainted with Hadoop's backbone, the Hadoop Distributed File System (HDFS). Learn about its architecture and the most common commands you need to manage and interact with HDFS in a variety of scenarios. You'll understand:

- The structure and purpose of HDFS.

- How to navigate, manage, and execute file operations within the HDFS environment.

Section 2: Sqoop - Data Import/Export Tool 🔄

Data migration is a critical task in data engineering. In this section, you'll master Sqoop, a robust tool for transferring bulk data between relational databases and Hadoop. You will:

- Learn the lifecycle of Sqoop commands.

- Understand how to migrate data from MySQL to HDFS and Hive using Sqoop with various file formats, compressions, delimiters, and queries.

- Explore incremental imports to efficiently manage data migration.
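To give a feel for the incremental-import idea, here is a minimal sketch in plain Python. It is not Sqoop itself: it only mimics the behavior of Sqoop's append mode, where rows are imported only if their check column exceeds the last value recorded by the previous run. The `Row` class, the `incremental_append` function, and the sample data are hypothetical names made up for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Row:
    id: int      # plays the role of the check column
    name: str


def incremental_append(source_rows, last_value):
    """Return rows whose id is greater than last_value,
    plus the new high-water mark to use on the next run."""
    new_rows = [r for r in source_rows if r.id > last_value]
    new_last = max((r.id for r in new_rows), default=last_value)
    return new_rows, new_last


# First run: nothing imported yet, so every row qualifies.
source = [Row(1, "a"), Row(2, "b"), Row(3, "c")]
first_batch, mark = incremental_append(source, last_value=0)

# A new row arrives in the source table; the second run picks up only that row.
source.append(Row(4, "d"))
second_batch, next_mark = incremental_append(source, last_value=mark)
```

In a real Sqoop job the same bookkeeping is driven by the `--incremental append`, `--check-column`, and `--last-value` options rather than by hand-written code.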

Section 3: Apache Flume - Event/Data Collector 🌪️

Next up is Apache Flume, the go-to tool for collecting, aggregating, and moving large amounts of log data. You will:

- Understand Flume's architecture and its role in the Big Data ecosystem.

- Learn to ingest real-time data from various sources such as Twitter, Netcat, and the output of executed commands, then visualize the results.

- Get hands-on with Flume interceptors, agents, and consolidation mechanisms.

Section 4: Apache Hive - Data Warehousing 📊

Hive is your key to querying and managing large datasets residing in Hadoop using a SQL-like language called HiveQL. You will:

- Get an introduction to Hive and its role in data warehousing.

- Learn about managed and external tables, and how to work with different file formats like Parquet and Avro.

- Understand the use of compression techniques, and explore Hive's analytical and string/date functions, partitioning, and bucketing capabilities.
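As a rough illustration of the partitioning and bucketing topics above, the sketch below models where a row would land: partitioning groups rows into a directory named after the partition column value (for example `country=US`), and bucketing assigns each row to one of a fixed number of buckets using a hash of the bucketing column modulo the bucket count. This is plain Python, not Hive; the `bucket_for` and `layout` helpers and the sample rows are hypothetical, and CRC32 is only a stand-in for Hive's own hash function.

```python
import zlib
from collections import defaultdict


def bucket_for(key: str, num_buckets: int) -> int:
    """Pick a bucket as hash(key) mod num_buckets.
    CRC32 is a deterministic stand-in, not the hash Hive uses."""
    return zlib.crc32(key.encode("utf-8")) % num_buckets


def layout(rows, partition_col, bucket_col, num_buckets):
    """Group rows by (partition directory, bucket number)."""
    files = defaultdict(list)
    for row in rows:
        partition_dir = f"{partition_col}={row[partition_col]}"
        bucket = bucket_for(str(row[bucket_col]), num_buckets)
        files[(partition_dir, bucket)].append(row)
    return dict(files)


rows = [
    {"country": "US", "user_id": "u1"},
    {"country": "US", "user_id": "u2"},
    {"country": "DE", "user_id": "u1"},
    {"country": "US", "user_id": "u1"},
]
files = layout(rows, partition_col="country", bucket_col="user_id", num_buckets=4)
```

The point of the sketch is that a query filtering on `country` only needs to read one directory, and rows with the same `user_id` always fall into the same bucket within a partition.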

Section 5: Apache Spark - Fast and General Engine for Large-Scale Data Processing ⚡

Spark is a fast, in-memory data processing engine with an easy-to-use API for both batch and streaming computation. In this section, you will:

- Dive into the concepts of RDDs, DAGs, stages, tasks, actions, and transformations that are fundamental to Spark's functionality.

- Work with DataFrames and learn how to manipulate data with different file formats and compressions.

- Apply the DataFrame API and Spark SQL to solve real-world problems.

- Integrate Spark with Cassandra for storage solutions.

- Learn to run Spark jobs in IntelliJ IDEA and on Amazon EMR (Elastic MapReduce).
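The distinction between transformations and actions mentioned in this section can be previewed without a cluster. The sketch below is a toy model in plain Python, not Spark's API: `LazyDataset` is a hypothetical class that merely records `map` and `filter` calls (transformations) and runs them only when `collect` (an action) is invoked, which is the lazy-evaluation behavior Spark's RDDs follow.

```python
class LazyDataset:
    """Toy stand-in for an RDD: records transformations, runs them only on an action."""

    def __init__(self, source, ops=()):
        self._source = list(source)
        self._ops = tuple(ops)

    def map(self, fn):
        # Transformation: returns a new dataset, computes nothing yet.
        return LazyDataset(self._source, self._ops + (("map", fn),))

    def filter(self, predicate):
        # Transformation: also only recorded.
        return LazyDataset(self._source, self._ops + (("filter", predicate),))

    def collect(self):
        # Action: replays the recorded operations over every element.
        results = []
        for item in self._source:
            keep = True
            for kind, fn in self._ops:
                if kind == "map":
                    item = fn(item)
                elif not fn(item):
                    keep = False
                    break
            if keep:
                results.append(item)
        return results


calls = []


def double(x):
    calls.append(x)  # track when the function actually runs
    return x * 2


pipeline = LazyDataset([1, 2, 3, 4]).map(double).filter(lambda x: x > 4)
calls_before_action = list(calls)  # still empty: nothing has executed
result = pipeline.collect()        # the action triggers the work
```

Real Spark goes further by turning the recorded operations into a DAG of stages and tasks that run across executors, which the course section covers.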

Section 6: MongoDB - NoSQL Database 🛠️

Lastly, we'll cover MongoDB, a document-oriented NoSQL database that provides scalable storage. You will:

- Understand the structure and use of MongoDB in Big Data environments.

- Learn how to perform CRUD operations using Spark to interact with MongoDB.

Why Take This Course? 🚀

- Real-World Skills: Gain practical experience by working on real-world problems and scenarios.

- Flexible Learning: Access course materials anytime, anywhere, fitting your learning around your schedule.

- Community Support: Engage with a community of like-minded professionals in the field of data engineering.

- Career Advancement: Equip yourself with the skills to advance in your current role or transition into new roles within the Big Data domain.

Embark on this journey to become a proficient data engineer and unlock the full potential of Big Data technologies! 🌟 Enroll now and transform your career with our Data Engineering Master Course.

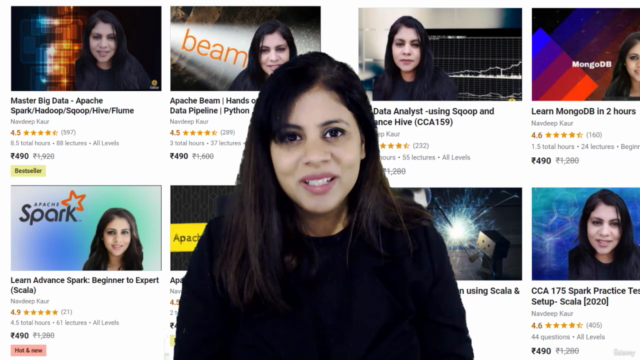
