Apache Spark In-Depth (Spark with Scala)

Why take this course?

🌟 Apache Spark In-Depth (Spark with Scala) Course Description 🌟

Dive Deep into Apache Spark with Expert Harish Masand

Overview: Get ready to embark on a comprehensive journey through the world of Big Data processing with the Apache Spark In-Depth (Spark with Scala) course. This course is meticulously designed by Harish Masand, an esteemed instructor known for his exceptional teaching in "Big Data Hadoop and Spark with Scala" and "Scala Programming In-Depth."

📚 Course Highlights:

- Foundations to Advanced Concepts: Start with a basic word count on Spark, progress through batch processing, and then move on to Spark Structured Streaming. Each step builds on the previous one.

- Real-World Application Development: Learn how to develop, deploy, and debug Spark applications, and how to tune, optimize, and troubleshoot them for better performance.

- Comprehensive Learning Pathway: This course provides the content you need for an in-depth study of Apache Spark and equips you with the knowledge to ace interviews for Spark roles.

- Accessible and Clear Instruction: Taught in straightforward English, this course is crafted so that learners at any level can follow it easily.

- No Prior Requirements: Basic knowledge of Hadoop and Scala is helpful but not required, making this course a good starting point for newcomers to Apache Spark.

Why Choose Apache Spark?

🎯 Performance Excellence:

- Speed: Run workloads up to 100x faster than traditional MapReduce programs. Spark achieves this with a state-of-the-art Directed Acyclic Graph (DAG) scheduler and a query optimizer, which together plan efficient execution.

- Ease of Use: Develop applications rapidly using Java, Scala, Python, R, or SQL. Spark offers over 80 high-level operators for building complex parallel apps, and you can use it interactively from the Scala, Python, R, and SQL shells.

- Generality: With a single engine, you can run SQL queries, process streaming data, execute machine learning algorithms, traverse graphs, and more. Combine these capabilities in one application to tackle diverse analytical tasks.

- Versatility: Spark is platform-agnostic, running on Hadoop YARN, Apache Mesos, Kubernetes, standalone clusters, or cloud services. It can also read from and write to a wide range of data sources.

Enroll Now and Transform Your Data Processing Skills with Apache Spark!

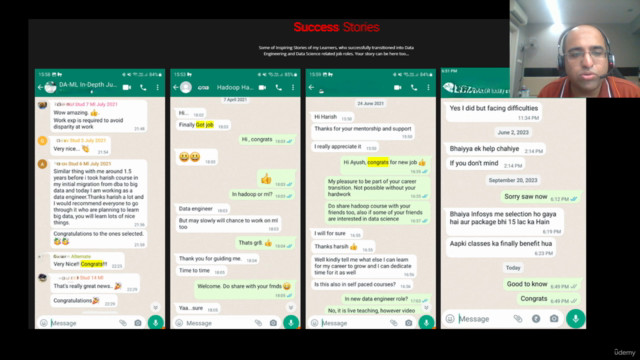

Whether you're an aspiring data engineer, a developer looking to expand your skill set, or simply curious about the capabilities of big data processing engines, this course is your gateway to mastering Apache Spark with Scala. Join us and unlock the power of data at scale! 🚀✨
